Kinect-Based Real-Time Gesture Recognition Using Deep Convolutional Neural Networks for Touchless Visualization of Hepatic Anatomical Models in Surgery

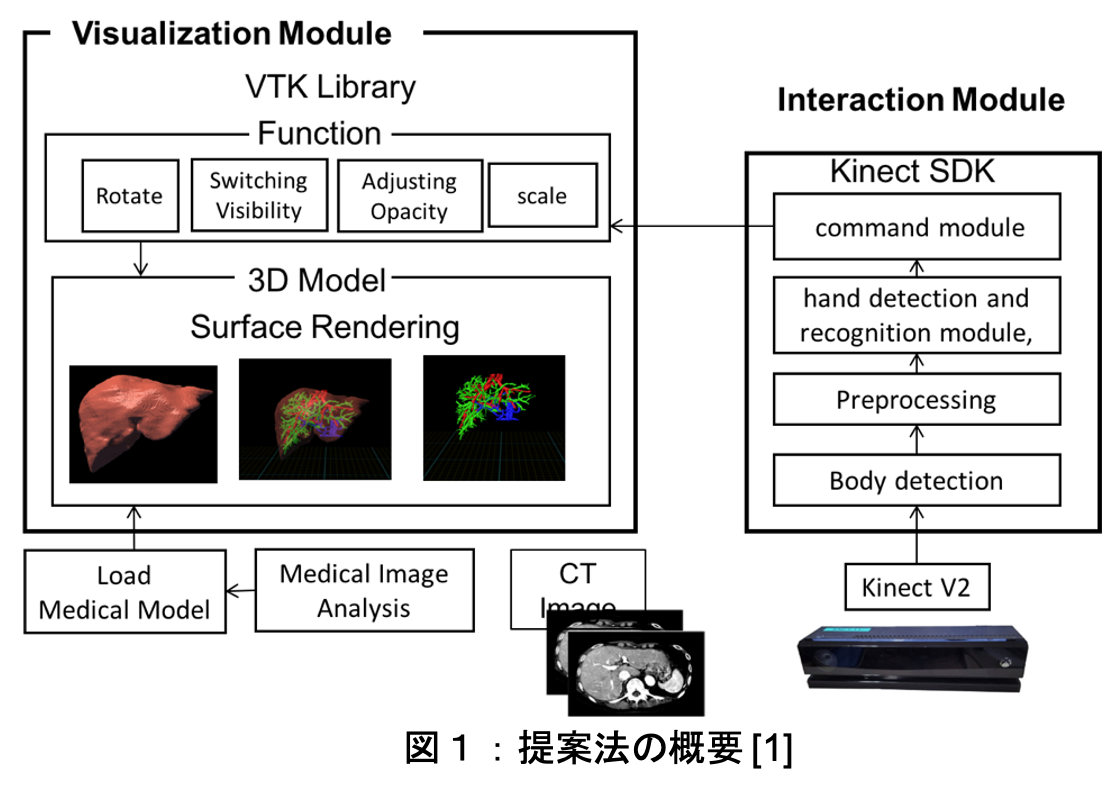

Visualization of three-dimensional (3D) medical images is an important tool in surgery, particularly during the operation itself. However, reviewing a 3D anatomical model while maintaining a sterile field in the operating room is often challenging. There is therefore great interest in touchless interaction using hand gestures to reduce the risk of infection during surgery. In this research, we propose an improved real-time gesture-recognition method based on deep convolutional neural networks that works with a Microsoft Kinect device. A new multi-view RGB-D dataset consisting of 25 hand gestures was constructed for deep learning.

Gesture recognition using Kinect